
Joule AI Release 1.1.0

Joule now ships with real-time inferencing, enabling advanced use cases

Introduction

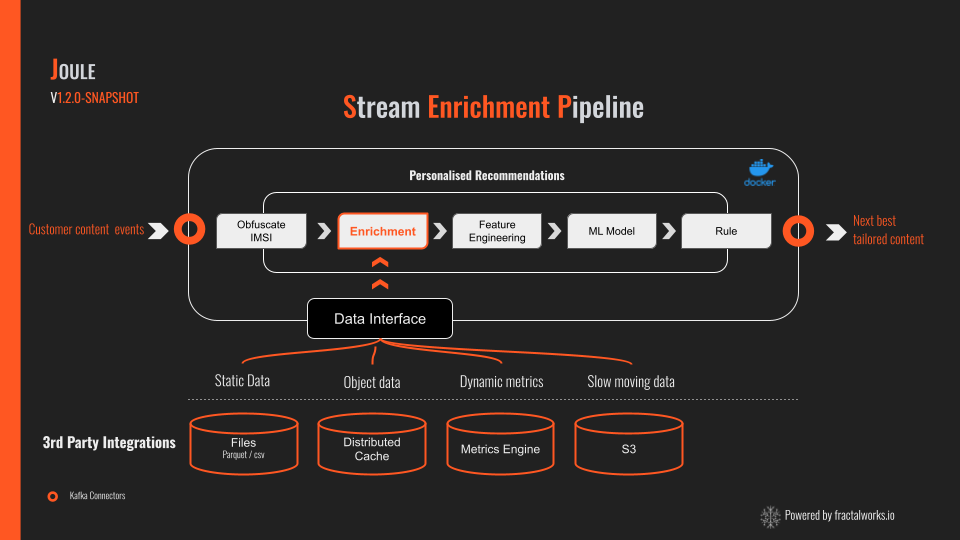

Joule’s latest release gives businesses a comprehensive platform for accelerating use case development, generating value while minimising risk. It combines dynamic ML models, metrics, reference data and observability to deliver real-time actions and insights.

With Joule, businesses can streamline their development efforts and base decisions on data-driven insights. Its intuitive development platform and user-focused design make it straightforward to put data to work.

Features

Predictive Processor

- JPMML model initialisation from local files and remote S3 stores

- Dynamic model refresh using model update notifications

- Offline prediction auditing that enables explainability, drift monitoring and model retraining
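Dynamic model refresh can be pictured as an atomic swap of the active model when an update notification arrives: scoring calls already in flight finish on the old model, and later events see the new one. The following is a minimal, stdlib-only sketch under that assumption; the `Model` interface, `ModelRegistry`, `onModelUpdate` and the loader are hypothetical names standing in for a loaded JPMML model, not Joule's or JPMML's API.

```java
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Hypothetical scoring interface standing in for a loaded JPMML model.
interface Model {
    String version();
    double predict(Map<String, Double> features);
}

// Holds the active model and swaps it atomically on update notifications.
class ModelRegistry {
    private final AtomicReference<Model> active;

    ModelRegistry(Model initial) {
        this.active = new AtomicReference<>(initial);
    }

    // Scoring reads the current reference; no locking on the hot path.
    double score(Map<String, Double> features) {
        return active.get().predict(features);
    }

    String activeVersion() {
        return active.get().version();
    }

    // Called when a model update notification arrives (hypothetical hook).
    // The new model is built first, so a failed load leaves the old model active.
    void onModelUpdate(Supplier<Model> loader) {
        Model next = loader.get();
        active.set(next);
    }
}

class ModelRefreshDemo {
    // Tiny linear model used only for this demo.
    static Model linear(String version, double weight) {
        return new Model() {
            public String version() { return version; }
            public double predict(Map<String, Double> f) {
                return weight * f.getOrDefault("x", 0.0);
            }
        };
    }

    public static void main(String[] args) {
        ModelRegistry registry = new ModelRegistry(linear("v1", 2.0));
        Map<String, Double> event = Map.of("x", 3.0);
        System.out.println(registry.activeVersion() + " -> " + registry.score(event)); // v1 -> 6.0
        registry.onModelUpdate(() -> linear("v2", 0.5));
        System.out.println(registry.activeVersion() + " -> " + registry.score(event)); // v2 -> 1.5
    }
}
```

Building the replacement before swapping keeps scoring available during a refresh, which is the property a notification-driven reload needs.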

Avro support

- Ability to process Avro records for inbound and outbound events

- Complex data types supported using custom mapping

- Schema registry support
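With a schema registry, each serialized Avro payload usually carries an identifier for its writer schema. The sketch below assumes the Confluent Schema Registry wire format (a zero magic byte, a 4-byte big-endian schema ID, then the Avro binary body); the `WireFormat` class is hypothetical, and encoding the Avro body itself is left to the Avro library.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

// Frames and unframes payloads using the Confluent Schema Registry wire format:
// [magic byte 0x0][4-byte big-endian schema ID][Avro binary-encoded record]
final class WireFormat {
    static final byte MAGIC_BYTE = 0x0;
    static final int HEADER_SIZE = 5;

    private WireFormat() {}

    static byte[] frame(int schemaId, byte[] avroBody) {
        // ByteBuffer is big-endian by default, matching the wire format.
        ByteBuffer buf = ByteBuffer.allocate(HEADER_SIZE + avroBody.length);
        buf.put(MAGIC_BYTE).putInt(schemaId).put(avroBody);
        return buf.array();
    }

    static int schemaId(byte[] message) {
        if (message.length < HEADER_SIZE || message[0] != MAGIC_BYTE) {
            throw new IllegalArgumentException("Not a schema-registry framed message");
        }
        return ByteBuffer.wrap(message, 1, 4).getInt();
    }

    static byte[] body(byte[] message) {
        schemaId(message); // validates the header before slicing
        return Arrays.copyOfRange(message, HEADER_SIZE, message.length);
    }
}
```

On the consuming side, the extracted ID is what a deserializer would look up in the registry to fetch the writer schema before decoding the body.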

MinIO S3 Transport

- Out-of-the-box multi-cloud S3 support

- Publish and consume events and insights to and from hybrid-hosted S3 buckets

- Drive pipeline processing using S3 bucket notifications

- Consumer supports the following file formats: PARQUET, CSV, ARROW, ORC

- Keep reference data up to date using external systems
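When processing is driven by bucket notifications, each notification names an object key that has to be routed to the right reader. In the S3 event notification format the object key is URL-encoded, so it needs decoding before its extension is inspected. The sketch below assumes that format and routes decoded keys to the consumer formats listed above; `NotificationRouter` and its extension table are hypothetical, and parsing the notification JSON is out of scope.

```java
import java.net.URLDecoder;
import java.nio.charset.StandardCharsets;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

final class NotificationRouter {
    enum Format { PARQUET, CSV, ARROW, ORC }

    // Hypothetical extension-to-format table for the supported consumer formats.
    private static final Map<String, Format> BY_EXTENSION = Map.of(
            "parquet", Format.PARQUET,
            "csv", Format.CSV,
            "arrow", Format.ARROW,
            "orc", Format.ORC);

    private NotificationRouter() {}

    // Decodes a URL-encoded object key ('+' becomes a space, %2F becomes '/').
    static String decodeKey(String encodedKey) {
        return URLDecoder.decode(encodedKey, StandardCharsets.UTF_8);
    }

    // Returns the reader format for a notified key, or empty if the object should be skipped.
    static Optional<Format> formatOf(String encodedKey) {
        String key = decodeKey(encodedKey);
        int dot = key.lastIndexOf('.');
        if (dot < 0 || dot == key.length() - 1) {
            return Optional.empty();
        }
        String ext = key.substring(dot + 1).toLowerCase(Locale.ROOT);
        return Optional.ofNullable(BY_EXTENSION.get(ext));
    }
}
```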

Reference Data

- Apply external data within stream processing tasks

- In-memory reference data elements kept up-to-date using source change notifications

- Support for key-value and S3 stores

- Reference data file loader utility
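Keeping in-memory reference data current from source change notifications can be pictured as a concurrent map that stream tasks read from while a notification handler applies upserts and deletes. This is a minimal sketch under that assumption; `ReferenceDataStore`, `upsert`, `delete` and `enrich` are hypothetical names, not Joule's reference data API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory reference data store: stream tasks read from it
// while a change-notification handler keeps it current.
class ReferenceDataStore {
    private final Map<String, Map<String, Object>> data = new ConcurrentHashMap<>();

    // Applies an insert/update notification. The value is copied into an
    // immutable map (Map.copyOf rejects null keys and values).
    void upsert(String key, Map<String, Object> value) {
        data.put(key, Map.copyOf(value));
    }

    // Applies a delete notification.
    void delete(String key) {
        data.remove(key);
    }

    Optional<Map<String, Object>> lookup(String key) {
        return Optional.ofNullable(data.get(key));
    }

    // Copies reference fields into the event under a prefix;
    // events without a matching key pass through unchanged.
    Map<String, Object> enrich(Map<String, Object> event, String keyField, String prefix) {
        Map<String, Object> enriched = new HashMap<>(event);
        Object key = event.get(keyField);
        if (key != null) {
            lookup(key.toString()).ifPresent(ref ->
                    ref.forEach((field, value) -> enriched.put(prefix + field, value)));
        }
        return enriched;
    }
}
```

Because `ConcurrentHashMap` retrievals generally do not block and each entry is an immutable copy, enrichment can continue while notifications arrive; a lookup sees either the old or the new entry for a key.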

REST Consumer APIs

- File-consuming endpoint that simplifies integration with upstream systems

- Joule event consumer endpoint for chaining Joule processors within a cloud environment

File Watcher Consumer

- File watcher that consumes and processes target files

- Supported formats: Parquet, JSON, CSV, ORC and Arrow IPC
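A file watcher consumer can be built on the JDK's `java.nio.file.WatchService`, which reports entries created in a watched directory. The sketch below assumes that approach and is not Joule's implementation; `FileWatcherSketch`, its extension filter and the `handler` callback are hypothetical, with the handler being where a format-specific reader would plug in.

```java
import java.io.IOException;
import java.nio.file.FileSystems;
import java.nio.file.Path;
import java.nio.file.StandardWatchEventKinds;
import java.nio.file.WatchEvent;
import java.nio.file.WatchKey;
import java.nio.file.WatchService;
import java.util.Locale;
import java.util.Set;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

final class FileWatcherSketch {
    // Hypothetical filter for the supported formats (Arrow IPC files assumed to use .arrow).
    static final Set<String> EXTENSIONS = Set.of("parquet", "json", "csv", "orc", "arrow");

    private FileWatcherSketch() {}

    static boolean isTarget(Path file) {
        String name = file.getFileName().toString().toLowerCase(Locale.ROOT);
        int dot = name.lastIndexOf('.');
        return dot > 0 && EXTENSIONS.contains(name.substring(dot + 1));
    }

    // Watches dir for new entries and passes matching files to handler.
    // Returns once a poll times out with no events; a long-running consumer would loop instead.
    static void watch(Path dir, long timeoutSeconds, Consumer<Path> handler)
            throws IOException, InterruptedException {
        try (WatchService watcher = FileSystems.getDefault().newWatchService()) {
            dir.register(watcher, StandardWatchEventKinds.ENTRY_CREATE);
            WatchKey key;
            while ((key = watcher.poll(timeoutSeconds, TimeUnit.SECONDS)) != null) {
                for (WatchEvent<?> event : key.pollEvents()) {
                    if (event.kind() == StandardWatchEventKinds.OVERFLOW) {
                        continue; // events were lost; a real consumer would rescan the directory
                    }
                    Path file = dir.resolve((Path) event.context());
                    if (isTarget(file)) {
                        handler.accept(file);
                    }
                }
                if (!key.reset()) {
                    break; // the directory is no longer accessible
                }
            }
        }
    }
}
```

A create event can arrive before the writer has finished the file, so a production consumer usually needs a completion signal, for example producers writing under a temporary name (which the extension filter ignores) and renaming the file when done.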

Enhancements

Kafka

- Confluent schema registry support for outbound events

- Message partition support

- Confluent and Redpanda support

Enricher processor

- Query optimisation

- SQL, OQL and key-value enrichment support

General transport

- Improved exception handling to fail on startup

- Strict ordering

Apache Arrow

- Integrated to process files of various formats efficiently

- Large file processing support

Optimisations

- Processing optimisations that reduce both memory and CPU utilisation while increasing event throughput.

- StreamEvent smart shallow cloning logic to reduce overall memory footprint while providing key data isolation

- StreamEvent change tracking switch to reduce memory overhead
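Shallow cloning with data isolation is commonly implemented as copy-on-write: a clone shares its parent's field map until its first write, and only then takes a private copy. The sketch below assumes that technique and adds an optional change-tracking switch; `CowEvent` and its methods are hypothetical and do not describe `StreamEvent`'s actual internals. It is single-threaded and not thread-safe.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical event illustrating copy-on-write cloning plus a change-tracking switch.
final class CowEvent {
    private Map<String, Object> fields;
    private boolean shared;              // true while another event may reference the same map
    private final boolean trackChanges;  // when false, no change history is kept (less memory)
    private final Map<String, Object> changes;

    CowEvent(Map<String, Object> initial, boolean trackChanges) {
        this(new HashMap<>(initial), false, trackChanges);
    }

    private CowEvent(Map<String, Object> fields, boolean shared, boolean trackChanges) {
        this.fields = fields;
        this.shared = shared;
        this.trackChanges = trackChanges;
        this.changes = trackChanges ? new LinkedHashMap<>() : null;
    }

    // Cheap clone: both events point at the same map until one of them writes.
    // The clone starts with an empty change history.
    CowEvent shallowClone() {
        shared = true;
        return new CowEvent(fields, true, trackChanges);
    }

    Object get(String name) {
        return fields.get(name);
    }

    void put(String name, Object value) {
        if (shared) {
            // First write since cloning: copy so the other event is unaffected.
            fields = new HashMap<>(fields);
            shared = false;
        }
        Object previous = fields.put(name, value);
        if (trackChanges && !changes.containsKey(name)) {
            changes.put(name, previous); // value before the first change (null if the field was new)
        }
    }

    // Field name -> value before its first change; empty when tracking is off.
    Map<String, Object> changes() {
        return trackChanges ? Collections.unmodifiableMap(changes) : Map.of();
    }
}
```

Clones that are only read never copy their fields, which is where the memory saving comes from; the switch lets callers skip the change history entirely when they do not need it.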

Dependency Upgrades

- Javalin 5.6.3

- Kafka 3.6.0

- Avro 1.11.3

- DuckDB 0.9.2

Bug Fixes

StreamEventCSVDeserializer

- Fields are now parsed into their defined data types instead of being held only as strings

- A custom date format can now be provided
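The CSV fix amounts to converting each column to its declared type, with dates parsed using a configurable pattern. A minimal sketch of that conversion, assuming a simple per-column type declaration; `CsvFieldConverter` and `FieldType` are hypothetical and not the `StreamEventCSVDeserializer` API, and the naive comma split shown does not handle quoted fields.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class CsvFieldConverter {
    enum FieldType { STRING, LONG, DOUBLE, BOOLEAN, DATE }

    private final DateTimeFormatter dateFormat;

    // The date pattern is configurable, e.g. "dd/MM/yyyy" instead of ISO-8601.
    CsvFieldConverter(String datePattern) {
        this.dateFormat = DateTimeFormatter.ofPattern(datePattern);
    }

    Object convert(String raw, FieldType type) {
        if (raw == null || raw.isEmpty()) {
            return null; // empty cells are treated as missing values
        }
        switch (type) {
            case LONG:    return Long.parseLong(raw.trim());
            case DOUBLE:  return Double.parseDouble(raw.trim());
            case BOOLEAN: return Boolean.parseBoolean(raw.trim());
            case DATE:    return LocalDate.parse(raw.trim(), dateFormat);
            default:      return raw;
        }
    }

    // Converts one CSV line using a header and per-column types (STRING when undeclared).
    Map<String, Object> convertLine(String line, List<String> header, Map<String, FieldType> types) {
        String[] cells = line.split(",", -1); // -1 keeps trailing empty cells
        Map<String, Object> event = new LinkedHashMap<>();
        for (int i = 0; i < header.size() && i < cells.length; i++) {
            String name = header.get(i);
            event.put(name, convert(cells[i], types.getOrDefault(name, FieldType.STRING)));
        }
        return event;
    }
}
```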

StreamEventJSONDeserializer

- Can now read an array of JSON StreamEvent objects

JVM Configuration Additions

- Apache Arrow requires ‘--add-opens=java.base/java.nio=ALL-UNNAMED’ to be added to the java command line

- Use the G1 garbage collector (‘-XX:+UseG1GC’), a region-based, generational collector, to improve memory usage
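Since Arrow depends on the `--add-opens` flag, a startup check can surface a misconfigured JVM early. A minimal sketch using only standard JDK APIs (`Module.isOpen` and the garbage collector MXBeans); the `JvmConfigCheck` class is hypothetical and not part of Joule. When launched with, for example, `java --add-opens=java.base/java.nio=ALL-UNNAMED -XX:+UseG1GC ...` from the class path, both checks should report true.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

final class JvmConfigCheck {
    private JvmConfigCheck() {}

    // True when java.base opens java.nio to this class's module. For class-path
    // code (the unnamed module) this is what the --add-opens flag above provides.
    static boolean javaNioOpen() {
        Module javaBase = Object.class.getModule();
        return javaBase.isOpen("java.nio", JvmConfigCheck.class.getModule());
    }

    // True when any registered collector MXBean is a G1 collector (names start with "G1").
    static boolean usingG1() {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            if (gc.getName().startsWith("G1")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("java.nio open to unnamed module: " + javaNioOpen());
        System.out.println("G1 garbage collector active: " + usingG1());
    }
}
```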

Getting started

To get started, download the following resources to prepare your environment and work through the provided documentation. Feel free to reach out with questions.

- Download the example code from GitLab

- Pull the latest docker image from DockerHub

- Work through the README file

We’re here to help

Feedback is most welcome, including thoughts on how to improve and extend Joule, and ideas for exciting use cases.

Join the FractalWorks Community Forum, where members openly share ideas and best practices and support each other. If you have any further questions about becoming a FractalWorks partner or customer, please get in touch; we will be happy to talk about your needs.